Professor Nigel W. John FEG FLSW


Augmented Reality for Anatomical Education (2006-2010)

The traditional use of cadaver dissection as a means of teaching gross anatomy has decreased markedly over recent decades. We wanted to find out whether the learning experience of cadaver dissection can be recreated digitally. The resulting BARETA system combines augmented reality (AR), used to display volume and surface renderings of medical datasets, with anatomically correct physical models produced by Rapid Prototyping (RP). The student therefore receives stimulation through touch as well as sight (Thomas, John, & Delieu, 2010).

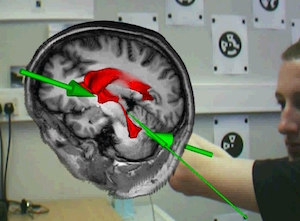

This project was undertaken by my PhD student Rhys Thomas and formed the basis of his thesis: Thomas, R. G. (2009). *A Tangible Augmented Reality Anatomy Teaching Tool*. PhD thesis, Bangor University.

The example scenario that we developed is an anatomy lesson on the human brain. An RP model of the ventricles (located in the centre of the brain) was built from structures segmented from volumetric data. The ventricles model and the user's viewpoint were each tracked with a separate magnetic tracking sensor. Volume renderings of the brain and surrounding anatomy can then be superimposed on a video feed of the real-world environment, viewed either on screen or through a head-mounted display. The effect is that users can pick up virtual tissue and examine it in their hands. Labelling and other visualizations can be added as required.
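With one magnetic sensor on the physical model and another on the viewer, the virtual anatomy has to be drawn at the model's pose as seen from the viewpoint. The page does not describe how BARETA does this, so the following is only a minimal sketch of the standard pose composition such a set-up needs. It assumes each sensor reports its pose as a 4×4 rigid transform into the transmitter's coordinate frame, and it ignores the fixed calibration offset between the head sensor and the camera. The function names are hypothetical.

```python
import numpy as np


def rigid_transform(angle_z_deg, translation):
    """Hypothetical helper: 4x4 homogeneous transform, rotation about z then translation."""
    theta = np.deg2rad(angle_z_deg)
    c, s = np.cos(theta), np.sin(theta)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = translation
    return T


def invert_rigid(T):
    """Invert a rigid transform via R^T and -R^T t, avoiding a general matrix inverse."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv


def model_in_view(T_tx_head, T_tx_model):
    """Pose of the tracked RP model in the viewer's frame.

    Assumption: both poses map sensor coordinates into the transmitter frame ("tx"),
    and the head sensor coincides with the camera (no calibration offset applied).
    """
    return invert_rigid(T_tx_head) @ T_tx_model


# Example: viewer 500 mm above the transmitter, model 200 mm above it, turned 90 degrees.
T_head = rigid_transform(0, [0, 0, 500])
T_model = rigid_transform(90, [0, 0, 200])
T_view = model_in_view(T_head, T_model)
vertex = T_view @ np.array([10.0, 0.0, 0.0, 1.0])  # a model-space vertex in homogeneous form
print(np.round(vertex[:3], 6))  # approximately (0, 10, -300) in the viewer's frame
```

In a live system the two sensor poses would be re-read for every video frame and the resulting matrix used as the model-view transform for the volume rendering. This is what would make the virtual tissue appear to move with the physical model as it is turned in the hand.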

Rhys is currently a Research Officer at Leeds University.

Further Reading

Winner of the 2010 Informa Healthcare Award for Best Paper.

- Thomas, R. G., John, N. W., & Delieu, J. M. (2010). Augmented reality for anatomical education. *Journal of Visual Communication in Medicine*, *33*(1), 6–15.